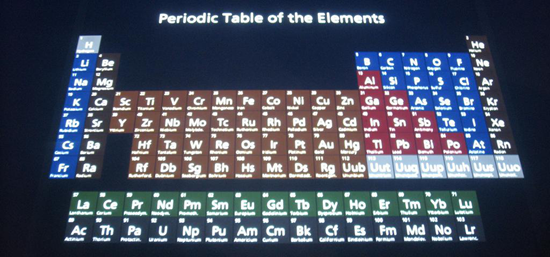

Chemistry as we learn it in school can be a pretty dry subject that involves memorizing a lot of numbers and chemical reactions. But that doesn’t have to be the case, and it turns out that there are a lot of fascinating stories about how we first learned about the elements on the periodic table. Chemistry has a lot of neat stuff buried in its history.

10. Seven Elements From One Mine

Photo credit: Svens Welt

Ytterbium, yttrium, terbium, and erbium - they’re a mouthful to say, and there’s a reason that they’re listed together. All four were found in a rather unlikely way, taking their name from the quartz quarry in Ytterby, Sweden, where they were unearthed. The quarry is known as something of a gold mine when it comes to documenting new elements. Gadolinium, holmium, lutetium, scandium, tantalum, and thulium were also found there. If it seems like that might cause confusion, it absolutely did.

In 1843, a Swedish chemist named Carl Gustaf Mosander took gadolinite and separated it into the rare-earth materials yttria, erbia, and terbia. Once he shared his findings, though, something got lost in translation, and erbia became known as terbia, while terbia was called erbia. In 1878, the newly christened erbia was further broken down into two more components - ytterbia and another erbia. It was surmised that ytterbia was a compound that included a new element, which was named ytterbium. This compound was separated into two elements, neoytterbium and lutecium. Are you confused yet? Would it help that neoytterbium got another name change and became just plain old ytterbium again, and lutecium became lutetium?

The end result of what came out of the Ytterby quarry was a whole handful of elements that were found for a couple of reasons. The mine was ripe for the picking, thanks to glacier activity during the last ice age. There was also a rather odd coincidence going on around the same time: The mine was originally opened to mine feldspar, which had recently been highlighted as a key component in the creation of porcelain. Making porcelain had been a closely guarded secret of the Far East, until some alchemists got involved. The mine at Ytterby had opened to help with the demand for porcelain, and chemist Johan Gadolin (who gave his name to some of the rocks) was working at the mine because of his friendship with an English porcelain maker.

9. Barium Was Mistaken For Witchcraft

Photo credit: Matthias Zepper

Today, barium is a pretty common element that’s used to make paper whiter and paints brighter, and as a contrast agent that blocks X-rays and makes problems with the digestive system more noticeable in scans. In the Middle Ages, it was a well-known substance, but not as we think of it today. Smooth stones, found mostly around Bologna, Italy, were popular with witches and alchemists because of their tendency to glow in the dark after being exposed to light for only a short amount of time.

In the 1600s, it was even suggested that the so-called Bologna stones were actually philosopher’s stones. They had mysterious properties: Heating them up would cause them to glow a strange, red colour. Expose them to sunlight for even a few minutes, and they would glow for hours. A shoemaker and part-time alchemist named Vincentius Casciorolus experimented on the stone, trying everything from using it to turn other metals into gold to creating an elixir that would make him immortal. He failed, sadly, and for almost another 200 years, the rock was nothing more than an odd curiosity that was associated with the mysteries of witchcraft.

It wasn’t until 1774, when Carl Scheele (of Scheele’s green fame) was experimenting with earth metals, that barium was recognized as something independent. Scheele originally called it terra ponderosa, or “heavy earth,” and it would be another few decades before an English chemist finally isolated and identified the element that made the witches’ stones glow.

8. Coincidental Helium

Scientific history is filled with instances where people race to be the first to document or explain something, but finding helium ended in a bizarre tie.

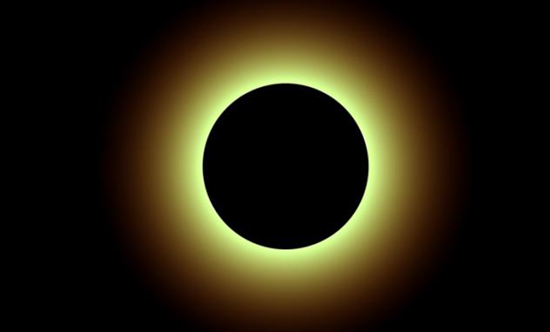

The scientific community was new at studying the emissions from the Sun in the late 19th century, and they thought that the best (and perhaps only) way to do so was to look at it during an eclipse. In 1868, Pierre Jules Cesar Janssen set up shop in India, where he watched the solar eclipse and saw something new - a yellow light that was previously unknown. He knew that he needed to study it more in order to determine just what this yellow light was, so he ended up building the spectrohelioscope to look at the Sun’s emissions during the day.

In a bizarre coincidence, an English astronomer was doing the exact same thing at the exact same time, half a world away. Joseph Norman Lockyer was also working on looking at the Sun’s emissions, also during the day, and he also saw the yellow light.

Both men wrote papers on their findings, sending them off to the French Academy of Sciences. The papers arrived on the exact same day, and although the two astronomers were at first rather ridiculed for their work, it was later confirmed, and they shared credit for the find.

7. The Great Name Debate

A lot of the names and symbols of the elements don’t seem to match, but that’s usually because the symbol comes from a Latin translation, like gold’s “Au.” The exception is tungsten, whose symbol is “W.”

The difference arises because the element had two names for a long time. The English-speaking world called it “tungsten,” while others called it “wolfram” for a very cool reason: Tungsten was first isolated from the mineral wolframite, and in some circles, it retained its old name until 2005. Even then, it didn’t give up without a fight, with Spanish chemists in particular arguing that “wolfram” shouldn’t have been dropped from the official information for tungsten.

In fact, in most languages other than English, “wolfram” was still used, and it’s the name that the men who found it, the Delhuyar brothers, requested be used. The word comes from the German word for “wolf’s foam,” and its use dates back to the early days of tin smelting. Before we knew anything about elements, people working the smelters recognized a certain mineral by the way it foamed when they melted it. They called the mineral “wolf’s foam” because they believed that its presence consumed the tin they were trying to extract from the mineral in the same way a wolf would consume its prey. Today, we know it’s the high tungsten content in the ore, but chemists fought long and hard to keep their name. They lost, but the symbol for tungsten still remains a “W.”

6. Neon Lights Predate Neon

Broadway and Las Vegas certainly wouldn’t be the same without the bright neon lights that have made them famous, but oddly, the idea behind neon lights is an old one - one that predates knowledge of the element.

Neon is one of the noble gases and one of only six elements that are inert. Odourless, colourless, and almost completely nonreactive, neon was found along with the other noble gases argon and krypton. In 1898, chemists Morris Travers and William Ramsay were experimenting with the evaporation of liquefied air when they documented the new gases. Neon was first used in 1902 to fill sealed glass tubes and create the garish, unmistakable advertising signs that we now see everywhere.

They weren’t the first, though; what we now know as neon signs date back to the 1850s, when Johann Heinrich Wilhelm Geissler made the first neon lights. The son of a glass maker, Geissler pioneered the vacuum tube, along with the vacuum pump and the method of fitting electrodes inside the glass tubes. He experimented with a number of different gases and produced many different kinds of colours, while neon is only reddish-orange. Neon’s popularity came in part because of the colour it gives off, and also because it’s incredibly long-lasting, remaining colourful for decades.

5. Aluminium Was More Valuable Than Gold

Chemists knew that aluminium was around for about 40 years before they had the technology to isolate it. When they finally did so in 1825, it became insanely valuable. Originally, a Danish chemist developed the method for extracting only the tiniest bit, and it wasn’t until 1845 that the Germans figured out how to create enough of it that they could study even its most basic properties. In 1852, the average price of aluminium was around US$1,200 per kilogram. Today, that’s the equivalent of about US$33,650.

It wasn’t until the 1880s that another process was developed that would allow for the more widespread use of aluminium, and until then, it remained incredibly valuable. The first president of the French Republic, Napoleon III, used aluminium dinner settings for only his most valued guests. The regular, run-of-the-mill guests were seated with gold or silver tableware. The King of Denmark wore an aluminium crown, and when it was chosen as the capstone of the Washington Monument, it was the equivalent of choosing pure silver today. Upscale Parisian ladies wore aluminium jewellery and used aluminium opera glasses to demonstrate just how wealthy they were.

Aluminium also formed the backbone of visions for the future. It was the biggest attraction at the Parisian Exposition of 1878, and it became the material of choice for writers like Jules Verne when they were building their grand visions of the future. Aluminium was going to be used for everything from entire city structures to rocket ships.

Of course, the value of aluminium took a steep dive when new ways were developed to create it, and it was suddenly everywhere.

4. Fluorine’s Deadly Challenge

The first observations of fluorine came from the 1500s, with a German mineralogist who described it as a material that served to lower the melting point of ore. In 1670, a glass worker accidentally found that fluorspar and acids would react and used the reaction to etch glass. Isolating fluorine proved much more difficult - and deadly.

It was our old friend Carl Scheele who determined that it was something in the fluorspar that was causing the reaction, and in 1771, the hunt for fluorine began in earnest. Before it was finally isolated by Ferdinand Frederic Henri Moissan in 1886 (earning him a Nobel Prize), the process left quite a trail of illness and injury. Moissan himself was forced to stop his work four times as he suffered from, and slowly recovered from, fluorine poisoning. The damage done to his body was so great that it’s generally thought that his life would have been incredibly shortened by it had he not died of appendicitis only a few months after accepting the Nobel Prize.

Humphry Davy’s attempts would leave him with permanent damage to his eyes and fingers. A pair of Irish chemists, Thomas and George Knox, also worked extensively on trying to isolate fluorine, with one dying and the other left bedridden for years. A Belgian chemist also died in his attempts, and a similar fate befell French chemist Jerome Nickels. In the 1860s, George Gore’s work resulted in a few explosions, and it was only when Moissan stumbled onto the idea of lowering the temperature of his sample to –23 degrees Celsius (–9 °F) and then trying to isolate the highly volatile liquid that fluorine was successfully documented for the first time.

3. The Element Named For The Devil

Nickel is incredibly common today, used in alloys and lending its name to a US coin (that’s really only about 25 percent actual nickel). The name is something of an oddity, though. While many elements are named for gods and goddesses, or their most desirable characteristic, nickel is named for the Devil.

The word “nickel” is short for the German word kupfernickel. Its use dates back to an era when copper was incredibly useful, but nickel wasn’t the least bit desirable. Miners, always a superstitious lot, would often find ore veins that looked like copper but weren’t. The worthless ore veins came to be called kupfernickel, which translates to “Old Nick’s copper.” Old Nick was a name for the Devil, and he was much more than that to the miners who were labouring deep underground. The belief was that Old Nick put the fake copper veins there on purpose, partially to make the miners waste their time and also to guide them in a direction that could be deadly. Every day was potentially deadly, after all, and miners have long believed in the presence of Earth spirits who can either help or kill the interlopers sent into their underground domain.

Pure nickel was first isolated in 1751 by Swedish chemist and mineralogist Axel Fredrik Cronstedt, and the name that the miners had been calling the worthless ore for centuries stuck.

2. The Bizarre Unveiling Of Palladium

Photo via Wikimedia

Palladium was documented by an incredibly under-studied genius named William Hyde Wollaston. Wollaston, who had a medical degree from Cambridge and only turned to chemistry after a long career as a doctor and inventor of optical instruments, isolated palladium and rhodium and created the first type of malleable platinum. His methods for revealing his finding of palladium to the world make for the best story, though.

After establishing a partnership with the financially well-off Smithson Tennant, Wollaston got access to a material that needed to be smuggled into England through Jamaica from what’s now Colombia - platinum ore. In 1801, he set up a full laboratory in his back garden and got to work.

His journals from 1802 talk about his new element, originally called “ceresium,” renamed “palladium” shortly afterward. Knowing that there were other researchers right behind him in their work, he had to go public with his findings. However, he wasn’t quite ready to present it formally, so he took a handful of his new element to a store on London’s Gerrard Street in Soho. He then handed out a bunch of flyers advertising a wonderful new type of silver that was up for sale. Chemists went rather mad for the whole idea, with a number of them trying to replicate the material and failing to do so. With everyone denouncing the idea that it was anything but some kind of alloy, he anonymously offered a reward for anyone who could prove it. Of course, no one could.

In the meantime, Wollaston kept working, found rhodium, and published a paper on it. That was in 1804; in 1805, he was ready to come forward with palladium and wrote a paper on his earlier find. Appearing before the Royal Society of London, he gave a talk on the properties of this strange new material, before summing it up with an admission that he had found it earlier and needed time to explore all of its properties to his satisfaction before making it official.

1. Chlorine And Phlogiston

Belief in a substance called phlogiston set back the documentation of chlorine for decades.

Introduced by Georg Ernst Stahl, the theory of phlogiston held that metals were made up of the core being of that metal, along with the substance phlogiston. Starting in the 18th century, chemists used it to explain why some metals change substance. When iron rusts, for example, it loses its iron-ness and only has its phlogiston left. The theory was an ever-evolving one, and by the 1760s, it was believed that the substance was “inflammable air,” also known as hydrogen. Other elements were referred to in terms of the theory, too. Oxygen was dephlogisticated air, and nitrogen was phlogiston-saturated air.

In 1774, Carl Scheele first produced chlorine using what we now call hydrochloric acid, and he described it in terms that we recognize pretty easily. It was acidic, suffocating, and “most oppressive to the lungs.” He recorded its tendency to bleach things and the immediate death that it brought to insects. Rather than recognizing it as a completely new element, though, Scheele believed that he had found a dephlogisticated version of muriatic (hydrochloric) acid. A French chemist argued that it was actually an oxide of an unknown element, and that wasn’t the end of the arguing. Humphry Davy (whom we mentioned in his ill-fated quest for fluorine) thought it was an oxygen-free compound. This was in complete opposition to the rest of the scientific community, which was convinced that it was a compound involving oxygen. It was only in 1811, well after its first isolation and the debunking of the phlogiston theory, that Davy confirmed it was an element and named it after its colour.