While some robotics engineers looked to the animal kingdom for inspiration, these robots are trying to show off their human side.

1. DeeChee

DeeChee, whose name is a play on the Latin phrase "to speak," is a robot that learns to talk. Well, sort of. But there's no question that DeeChee is helping its creator, Caroline Lyon at the University of Hertfordshire in the U.K., investigate how humans acquire language.

DeeChee starts out spewing random syllables. However, as it listens to humans trying to teach it colours and shapes, the robot eventually starts to learn words. Or at least, what sound like words. DeeChee doesn't associate any meaning with the words it learns. Instead, at the beginning of each 8-minute session, DeeChee randomly selects a handful of syllables from its memory. After listening to its teacher, it identifies the syllables that pop up most frequently and begins to weed out the ones that don't.
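For the curious, the frequency idea described above can be sketched in a few lines of Python. This is only a toy illustration, not Lyon's actual software: the function names, the `boost` and `decay` parameters, and the weighting scheme are all assumptions made for the example.

```python
from collections import Counter
import random


def update_memory(memory, heard, boost=1.0, decay=0.5):
    """Return new syllable weights after one teaching session.

    Hypothetical scheme: every stored weight decays, and each syllable
    heard from the teacher gains weight in proportion to how often it
    occurred. Rarely heard syllables therefore fade over time.
    """
    counts = Counter(heard)
    updated = {}
    for syllable in set(memory) | set(counts):
        old_weight = memory.get(syllable, 0.0)
        updated[syllable] = old_weight * decay + boost * counts[syllable]
    return updated


def babble(memory, k, rng):
    """Pick k syllables at random, favouring the heavier ones."""
    syllables = list(memory)
    weights = [memory[s] for s in syllables]
    return rng.choices(syllables, weights=weights, k=k)


# Start with equally likely random syllables, then hear "red" twice.
memory = {"ba": 1.0, "ku": 1.0, "red": 1.0}
memory = update_memory(memory, ["red", "red", "green"])
sample = babble(memory, k=5, rng=random.Random(0))
```

After the update, "red" carries far more weight than "ba" or "ku", so it turns up more often in the babble, which is roughly the "sounds like words" effect described above.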

2. Motormouth Robot KTR-2

Most robots "talk" or produce sounds via speakers and software. But Hideyuki Sawada from Kagawa University built a mouth robot that tries to duplicate the way we humans produce sounds through our voice boxes. Inside the robot, a compressor passes air through an artificial set of vocal cords, which control both the volume and pitch of the sound. From there, the air moves along a long segmented tube and finally exits out of a flexible mouthpiece.

The resulting sounds can be hard to understand, but Sawada has found an interesting use for his mechanical voice box: The robot assists deaf students in learning how to pronounce vowels. The students first look at an MRI image of their own mouth and tongue movements when they speak. The students then look at the robot mouth as a reference point and try to mimic its configuration. The students don't become perfect at pronouncing the vowels, but they show a marked improvement after working with the bot.

3. Hugvie

Hugvie looks less like a robot and more like an amorphous (but friendly) ghost. Osaka University's Hiroshi Ishiguro envisions the robot as a way to make phone conversations more intimate. Users insert their cell phone in the robot's head and then talk to it, embracing it as if it were a real person.

In addition to the soft cushioning, Hugvie has two small vibrating motors that simulate a heartbeat. The motors react to the caller's voice as well. If Hugvie detects the caller talking at a higher volume or a faster pace, the motors will speed up and more accurately reflect the caller's state of excitement. Ishiguro ultimately sees two Hugvies synced with one another so the movements of one caller's gestures are transferred and mimicked by the receiving robot.
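The voice-to-heartbeat mapping could look something like the sketch below. None of these numbers come from Ishiguro's lab: the 60 to 120 beats-per-minute range, the equal weighting of volume and pace, and the function name are all assumptions chosen just to show the idea.

```python
def heartbeat_bpm(volume, pace, base_bpm=60.0, max_bpm=120.0):
    """Map a caller's voice to a simulated heartbeat rate.

    volume and pace are assumed to be normalised to the range 0..1
    (0 = quiet or slow, 1 = loud or fast). The two are averaged into
    a single excitement level, which scales the rate linearly
    between base_bpm and max_bpm.
    """
    excitement = 0.5 * volume + 0.5 * pace
    excitement = max(0.0, min(1.0, excitement))  # clamp out-of-range input
    return base_bpm + excitement * (max_bpm - base_bpm)


calm = heartbeat_bpm(volume=0.0, pace=0.0)      # resting rate
excited = heartbeat_bpm(volume=1.0, pace=1.0)   # fastest rate
```

A louder or faster caller pushes the excitement level up, and the motors would pulse faster to match.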

4. DART

DART, or the Dexterous Anthropomorphic Robotic Typing hand, is good at its namesake job. The skeletal hand consists of 19 motors that control the movements of both fingers and wrist. Once given a string of words to type out, DART shifts between three different hand configurations to type out each word at a rate of approximately 20 words per minute.
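To put 20 words per minute in perspective, here is a quick back-of-the-envelope calculation. It assumes the common typing-test convention that one "word" equals five keystrokes, which may not be how DART's team measured it.

```python
def typing_seconds(text, wpm=20.0, chars_per_word=5):
    """Estimate how long it takes to type text at a given speed.

    Assumes the typing-test convention of chars_per_word keystrokes
    per word, so 20 wpm works out to 100 keystrokes per minute.
    """
    keystrokes_per_minute = wpm * chars_per_word
    return len(text) / keystrokes_per_minute * 60.0


# A 100-character message at 20 wpm
seconds = typing_seconds("x" * 100)
```

Under that assumption, DART manages about 100 keystrokes a minute, or one key roughly every 0.6 seconds.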

According to Shashank Priya, a professor at Virginia Tech, DART is just one part of the bigger picture of creating a better humanoid robot. His team has also developed realistic skin and facial expressions in the process. "At this point, we have the full body, but the biggest challenge is to integrate it into a single robot."

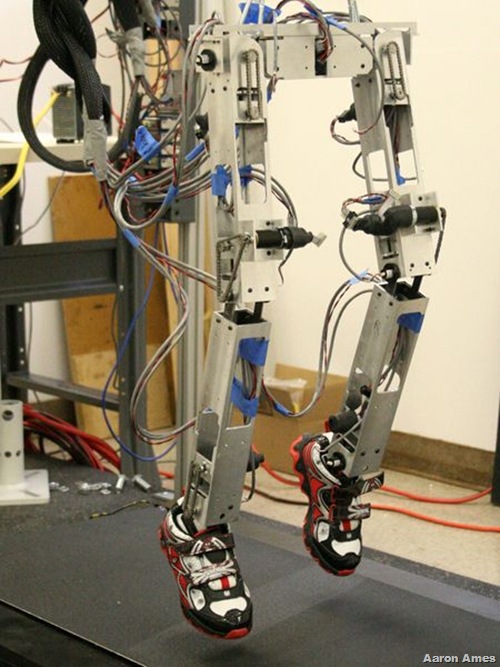

5. AMBER

Walking on two legs is no easy feat. Making a robot that can do the same seems like it should require an intricate network of sensors and motors. Aaron Ames at the Texas A&M Bipedal Experimental Robotics lab (AMBER for short) opted for a simpler and less bulky approach. The robot itself carries only a couple of sensors along the legs to detect joint angles and track foot position.

By sticking to a (relatively) simple design, Ames discovered that the robot started exhibiting behaviours that it wasn't explicitly programmed to perform. AMBER can handle a variety of obstacles, whether it's walking across uneven terrain or being pushed around by its experimenters. According to Ames, "We don't plan for any of this. It's really like a human reflex."
